A common and highly effective pattern is to use Kafka as the event stream and Redis as the fast-access key-value store. This setup lets you react to state changes in real time and serve instant lookups without hammering your primary database.

How It Works:

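A Kafka consumer subscribes to a compacted topic (for example, `user-state`). Compaction guarantees that the topic keeps at least the latest record for every key, so it always holds the current state.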

For every incoming event:

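- Processes the message (deserializing or transforming it as needed).

- Writes the key-value pair to Redis (e.g., `user_id -> serialized_data`), overwriting any previous value for that key. A record with a null value (a tombstone) deletes the key instead.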
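To make the per-event update rule concrete without a running Kafka or Redis, here is a minimal sketch of the logic in plain Java. `CacheUpdateSketch`, `KeyValueStore`, `InMemoryStore` and `onEvent` are hypothetical names chosen for illustration; a real version would back the interface with Jedis (`set` and `del`). It assumes the standard compacted-topic convention that a null value is a tombstone.

```java
import java.util.HashMap;
import java.util.Map;

// Minimal, Kafka-free sketch of the per-event cache update rule.
class CacheUpdateSketch {

    // Hypothetical abstraction over the cache; a production version would
    // delegate to Jedis (jedis.set / jedis.del) instead of a HashMap.
    interface KeyValueStore {
        void set(String key, String value);
        void delete(String key);
    }

    // In-memory stand-in for Redis so the logic can run without a server.
    static class InMemoryStore implements KeyValueStore {
        final Map<String, String> data = new HashMap<>();

        @Override
        public void set(String key, String value) { data.put(key, value); }

        @Override
        public void delete(String key) { data.remove(key); }
    }

    // Applies one Kafka record (key, value) to the store.
    static void onEvent(KeyValueStore store, String key, String value) {
        if (value == null) {
            // Tombstone: the key was deleted upstream, so evict it.
            store.delete(key);
        } else {
            // Upsert: the newest event for a key overwrites the cached value.
            store.set(key, value);
        }
    }

    public static void main(String[] args) {
        InMemoryStore store = new InMemoryStore();
        onEvent(store, "user-1", "{\"name\":\"Ada\"}");
        onEvent(store, "user-1", "{\"name\":\"Ada Lovelace\"}");
        onEvent(store, "user-2", "{\"name\":\"Bob\"}");
        onEvent(store, "user-2", null);
        System.out.println(store.data); // {user-1={"name":"Ada Lovelace"}}
    }
}
```

Because each event simply overwrites or deletes its key, re-applying a batch of events in the same order (for example, after a consumer restarts before committing offsets) leaves the cache in the same final state.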

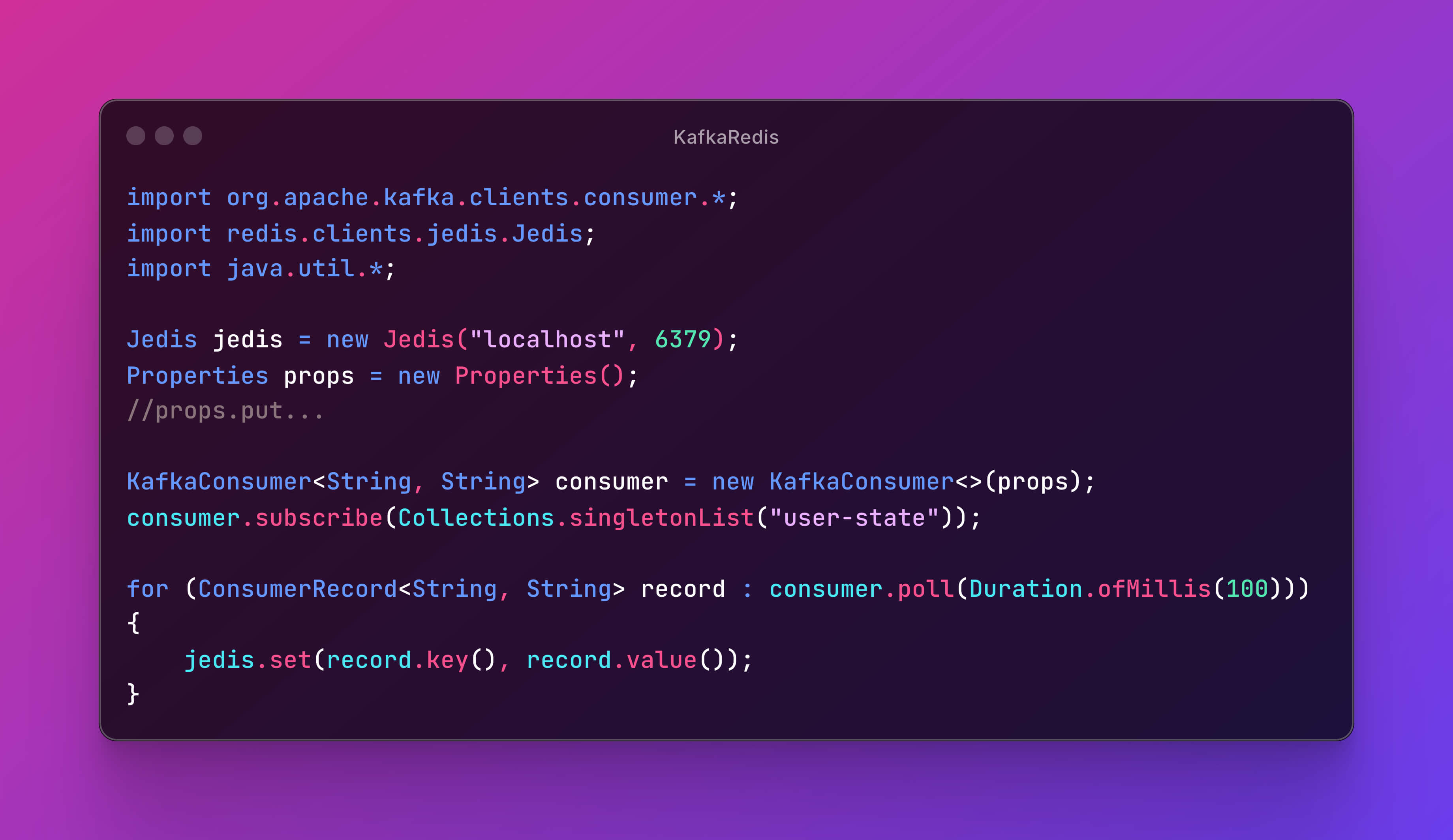

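Here’s a minimal example in Java (it assumes the kafka-clients and Jedis libraries are on the classpath, with Kafka and Redis running locally):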

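```java
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import redis.clients.jedis.Jedis;

import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

public class CacheSync {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092");
        props.put("group.id", "cache-sync");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // Only used when this group has no committed offsets yet.
        props.put("auto.offset.reset", "earliest");

        // Both clients are Closeable, so try-with-resources releases them on exit.
        try (Jedis jedis = new Jedis("localhost", 6379);
             KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
            consumer.subscribe(Collections.singletonList("user-state"));

            // Poll in a loop: a single poll() returns only one batch of records.
            while (true) {
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
                for (ConsumerRecord<String, String> record : records) {
                    if (record.value() == null) {
                        // Tombstone on a compacted topic: remove the key from Redis.
                        jedis.del(record.key());
                    } else {
                        jedis.set(record.key(), record.value());
                    }
                }
            }
        }
    }
}
```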

Benefits:

- Redis typically delivers sub-millisecond reads, ideal for high-frequency lookups.

- Kafka acts as the source of truth: the compacted topic retains at least the latest value for every key, so if Redis loses data, the cache can be rebuilt from the topic.

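- Rehydrating the Redis cache is simple: replay the topic from the beginning. Keep in mind that `auto.offset.reset=earliest` only applies when the consumer group has no committed offsets, so restarting the same group resumes where it left off. To rebuild, either start the consumer under a new `group.id` or reset the existing group's offsets while it is inactive (for example, `kafka-consumer-groups.sh --bootstrap-server localhost:9092 --group cache-sync --topic user-state --reset-offsets --to-earliest --execute`).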
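Why does replaying the topic rebuild the cache correctly even after old records have been compacted away? The following plain-Java model is a sketch, not Kafka's implementation: `CompactionSketch`, `Event`, `compact` and `replay` are hypothetical names, and a `HashMap` stands in for Redis. It keeps only the latest record per key (the end state log compaction converges to) and checks that replaying the compacted log gives the same cache contents as replaying the full history.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified model of cache rehydration from a compacted topic.
class CompactionSketch {

    // One record in the topic; a null value represents a tombstone.
    static final class Event {
        final String key;
        final String value;

        Event(String key, String value) {
            this.key = key;
            this.value = value;
        }
    }

    // Keep only the latest record per key, preserving offset order.
    // Real compaction runs in the background and skips the active segment;
    // this models only the end state it converges to.
    static List<Event> compact(List<Event> log) {
        Map<String, Integer> lastOffset = new HashMap<>();
        for (int i = 0; i < log.size(); i++) {
            lastOffset.put(log.get(i).key, i);
        }
        List<Event> compacted = new ArrayList<>();
        for (int i = 0; i < log.size(); i++) {
            if (lastOffset.get(log.get(i).key) == i) {
                compacted.add(log.get(i));
            }
        }
        return compacted;
    }

    // Rebuild the cache by replaying from offset 0, as a consumer with
    // no committed offsets and auto.offset.reset=earliest would.
    static Map<String, String> replay(List<Event> log) {
        Map<String, String> cache = new HashMap<>();
        for (Event e : log) {
            if (e.value == null) {
                cache.remove(e.key);
            } else {
                cache.put(e.key, e.value);
            }
        }
        return cache;
    }

    public static void main(String[] args) {
        List<Event> log = List.of(
                new Event("user-1", "v1"),
                new Event("user-2", "v1"),
                new Event("user-1", "v2"),
                new Event("user-2", null),
                new Event("user-3", "v1"));
        List<Event> compacted = compact(log);
        System.out.println(compacted.size());                       // 3
        System.out.println(replay(compacted).equals(replay(log)));  // true
    }
}
```

The equality holds only because the cache update is a last-write-wins upsert per key. If the consumer instead accumulated values (counters, appended lists), compaction would discard history the cache depends on.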

Use Cases:

- User profiles

- Configuration settings

- Device states

- Any frequently read data that changes infrequently

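This setup is scalable, reactive, and simplifies the architecture for systems that need fast access to changing data. Keep in mind that Redis is eventually consistent with the topic: a read may briefly return the previous value until the consumer applies the latest event.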